Preparing the publicity plan for Made by Humans, my recent book about data, artificial intelligence and ethics, I made one request of my publisher: no “women in technology” panels.

I have never liked drawing attention to the fact that I’m a woman in technology. I don’t want the most prominent fact about me to be my gender rather than my expertise or my experience, or the impact of my work. In male-dominated settings, the last thing I want to feel is that I’m only there because of my gender, or that it’s the first thing people notice about me. I don’t like that being described as a “woman in tech” flattens my identity, makes gender the defining wrapper around my experience, irrespective of race, class, education, family history, political beliefs and all the other hows and whys that make life more complicated. These are some of the intellectual reasons.

A more immediate reason I haven’t wanted to be known for talking about gender is that I’m still young and I want to have a career in this industry. I like what I do. I work on a range of issues related to data sharing and use. At the moment I lead a technical team designing data standards and software to support consumers sharing data on their own terms with organisations they trust. Data underpins so much of the current interest in AI, so it’s a good time to be working on projects trying to make it useful and learning to understand its limitations.

It’s also true that most of the time my clients, employers, team members, fellow panellists and advocates are male. Most of the time they’re excellent people. I don’t like making them feel uncomfortable. People generally don’t like feeling as if they’re not being fair or that in some structural ways, the world isn’t fair. Avoiding making people uncomfortable — particularly those who decide whether you’ll be invited to speak at a conference, or hired, or promoted, or put forward for a new exciting opportunity — is still a sensible career move.

So I have long assumed that the best way to talk about gender and be a strong advocate for women is from a distance, at the pinnacle of my career, when I’m the one in the position of power. What a strange pact to make with myself: to effect change in the technology industry, to become a female leader, I just need to stay silent on issues affecting women in the industry. In thirty years’ time ask me what it was like and, boy, will I have some stories to tell — and some really good suggestions!

But I don’t think I can wait any longer. My sense of how to navigate the world as a woman and still get ahead was shaken in 2018. In the media, I watched as women who tried to keep their heads down and avoid making a scene still found themselves branded attention-seekers, deviants, villains — even though it was men behaving badly who were on trial. I published a book in a technology field and tried diligently to avoid discussing gender, only to be dismayed by the influence gender had on how it was received, who read it, who saw value in it.

At events, it was almost always women who approached me to say they enjoyed the panel and to ask follow-up questions. The younger the men, the more likely they were to want to argue with and dismiss me. At the book signings that followed, while my queues mainly comprised women, their requests for dedications were almost always to sons and nephews, brothers and husbands. I smiled politely through comments about being on panels specifically to add “a woman’s perspective.” I looked past the male panellists who interrupted me, repeated me, who reached out to touch me while they made their point.

I was also pregnant. By the time you read this I will have given birth to a baby girl. It is hard to describe how much this has recast what I thought was the right and wrong way to make it as a woman. She is the daughter of two intelligent parents who are passionate about technology. She is currently a ferocious and unapologetic wriggler who takes up every inch of available space and demands our attention, oblivious to the outside world. I don’t know her yet but I already admire her for that.

I do not want her to grow to believe that in order to successfully navigate the world she must make herself small, put up with poor treatment, apologise for taking up space that is rightfully hers. I don’t know how I would look her in the eye when she realises that this way of being does not help her.

While it can seem like we’re only now talking about gender issues in the tech sector, the discussion has been going on for decades. What makes it wearying is how many of the problems raised years ago remain the same.

In 1983 — before I was born — female graduate students and research staff from the computer science and artificial intelligence laboratories at MIT published a vivid account of how a hostile environment to women in their labs impeded academic equality. They described bullying, sexual harassment and negative comments explicitly and implicitly based on gender. They described being overlooked for their technical expertise, and ignored and interrupted when they tried to offer that expertise. The authors made five pages of recommendations aimed at addressing conscious and unconscious differences in attitudes towards women in the industry. “Responsibility for change rests with the entire community,” they wrote, “not just the women.”

It’s clear that for there to be serious improvements in the numbers of girls and women in technology, cultural attitudes towards what girls are interested in and capable of will have to change. We have to want them to change. But I’m not sure that we want that as a society. In Australia, declining participation rates among girls studying advanced maths and science subjects in high school continue to be a cause for concern. The reasons for the decline remain broadly the same as they were twenty years ago, when Jane Margolis and Allan Fisher interviewed more than a hundred female and male computer science students at Carnegie Mellon University, home to one of the top computer science departments in the United States, as part of their seminal study of gender barriers facing women entering the profession.

In Unlocking the Clubhouse: Women in Computing, Margolis and Fisher charted how computing was claimed as male territory and made hostile for girls and young women. Throughout primary and high school, the curriculum, teachers’ expectations and parental attitudes were shaped around pathways that assumed computers were for boys. Even where women did persist with an interest in computers into college, Margolis and Fisher observed that by the time they graduated “most… faced a technical culture whose values don’t match their own, and ha[d] encountered a variety of discouraging experiences with teachers, peers and curriculum.”

Back then, as now, barriers persisted in the workforce. In the mid-2000s, The Athena Factor report on female scientists and technologists working in forty-three global companies in seven countries concluded that while 41 per cent of employees in technical roles in those companies were women, over time 52 per cent of them would quit their jobs. The key reasons for quitting: exclusionary, predatory workplace cultures; isolation, often as the sole woman on a technical team; and stalled career pathways that saw women moved sideways into support or executor roles. “Discrimination,” Meg Urry, astrophysicist and former chair of the Yale physics department, wrote in the Washington Post in 2005, “isn’t a thunderbolt, it isn’t an abrupt slap in the face. It’s the slow drumbeat of being underappreciated, feeling uncomfortable and encountering roadblocks along the path to success.”

These themes emerge in thousands of books, white papers, op-eds and articles: women leave the tech industry because they’re isolated, because they’re ignored, because they’re treated unfairly, underpaid and unable to advance. These problems persist, and every year there are new headlines concerning gender discrimination at every level of the industry. In 2018, female employees working for Google in California filed a class-action lawsuit alleging the multinational tech company systematically paid women less for doing similar work to men, while “segregating” technically qualified women into lower-paying, non-technical career tracks. The same year, 20,000 employees and contractors walked out at offices around the world to protest sexual harassment at the company after news broke that Andy Rubin, the “father of Android,” had been paid US$90 million to leave Google quietly amid credible accusations of sexual harassment.

In every tech organisation I have worked in, these kinds of dynamics remain uncomfortably familiar. There are more women than men in administrative roles, in project coordination, and in front-end development and design roles, although some of these women began in the industry with technical degrees and entry-level technical roles. Gender-related salary gaps persist, even within the same technical roles and leadership positions. HR processes, designed to create a level playing field, still inadvertently reward those who complain the loudest, who demand more money, who tend more often than not to be men. It remains difficult for women to pursue bullying and harassment complaints, particularly against powerful harassers, without career consequences.

Writing about gender in technology, it’s easy to fall into what computer engineer Erica Joy Baker describes as “colourless diversity.” A 2018 study by the Pew Research Center noted that while 74 per cent of women in computer jobs in the United States reported experiencing workplace discrimination, 62 per cent of African-American employees also reported racially motivated discrimination. Women of colour in tech find themselves doubly affected.

I am reflected in the statistics around gender: I am affected by them, perpetuate them, benefit from them. I have never successfully negotiated a meaningful salary increase or bonus. I have watched as male successors in my own former roles are paid more than I was to do the same job. I have managed teams where my male employees earned more than I did. These kinds of things happen both because I don’t speak up and because organisations let it happen.

I know this because as a manager, I let it happen too. I get requests for higher pay and promotions more frequently from men. Even if they’re rude or unreasonable or completely delusional, I take their sense of being undervalued seriously. I want to make them happy. The women I have managed rarely express their outrage or frustration so openly. They’re slower to escalate concerns and less likely to threaten to leave. I also know that as a white woman in tech, despite these experiences, I’m still statistically likely to be paid more and receive more opportunities than any of my non-white colleagues, male or female or non-binary.

Years of focus on bringing more women into technology roles have created a sense of being eagerly sought out and highly valued. But workplace dynamics and cultural attitudes still persist in making women of all ages, ethnicities, sexualities, once they’re in the sector, feel undervalued and ignored. It’s a strange paradox to live within. On the basis of our gender we’re highly visible (more visible if we’re white and heterosexual). As experts, as contributors to those technologies, it can still feel like we’re hidden in plain sight.

In August 2018, WIRED magazine asked: “AI is the future: but where are the women?” Working with start-up Element AI, it estimated that only around 12 per cent of leading machine-learning researchers in AI were women. Concerns about a lack of diversity in computer science have taken on new urgency, partly driven by growing awareness of how human designers influence the systems making decisions about our lives. In its article, WIRED outlined a growing number of programs and scholarships aimed at increasing gender representation in grad schools and industry, at conferences and workshops, while concluding that “few people in AI expect the proportion of women or ethnic minorities to grow swiftly.”

For women, the line between “inside” and “outside” the tech sector — and therefore what contributions are perceived as valuable — keeps moving. While WIRED focused on a narrow set of skills being brought to applied AI — machine-learning researchers — it nonetheless recast these skills as the only contributions worth counting towards AI’s impact. The “people whose work underpins the vision of AI,” the “group charting society’s future” in areas like facial recognition, driverless cars and crime prediction, didn’t include the product owners, user-experience designers, ethnographers, front-end developers, the sociologists and anthropologists, subject-matter experts or the customer-relationship managers who work alongside machine-learning researchers on the same applied projects.

Many of these roles evolved explicitly to create the connection between a system and society, between its intended use and unintended consequences. They are roles that typically encourage critical thinking, empathy, seeking out of diverse perspectives — all skills that leaders in the tech industry have identified as critical to the success of technology projects. The proportion of women in these kinds of roles tends to be higher, both by choice and perhaps, as the female employees at Google alleged in their 2018 lawsuit, by design. Yet, even as we wrestle in public debates with the impact the design of a technical system has on humans and society, our gaze keeps sliding over these people — already in the industry — who are explicitly tasked with addressing these problems, including many women. If in public commentary we don’t see or count their contributions as part of the development of AI, then their contributions don’t get valued as part of teams developing AI.

I want more diverse women to become machine-learning researchers. I also want the contributions women already make in a range of roles to be properly recognised. What matters isn’t so much getting more women into a narrowly defined set of technical roles, the boundaries of which are defined by the existing occupants (who are overwhelmingly male). This is still a miserably myopic approach to contributions that “count” in tech. What matters is that the industry evolves to define itself according to the wide set of perspectives, the rich range of skills and expertise, that go into making technology work for humans.

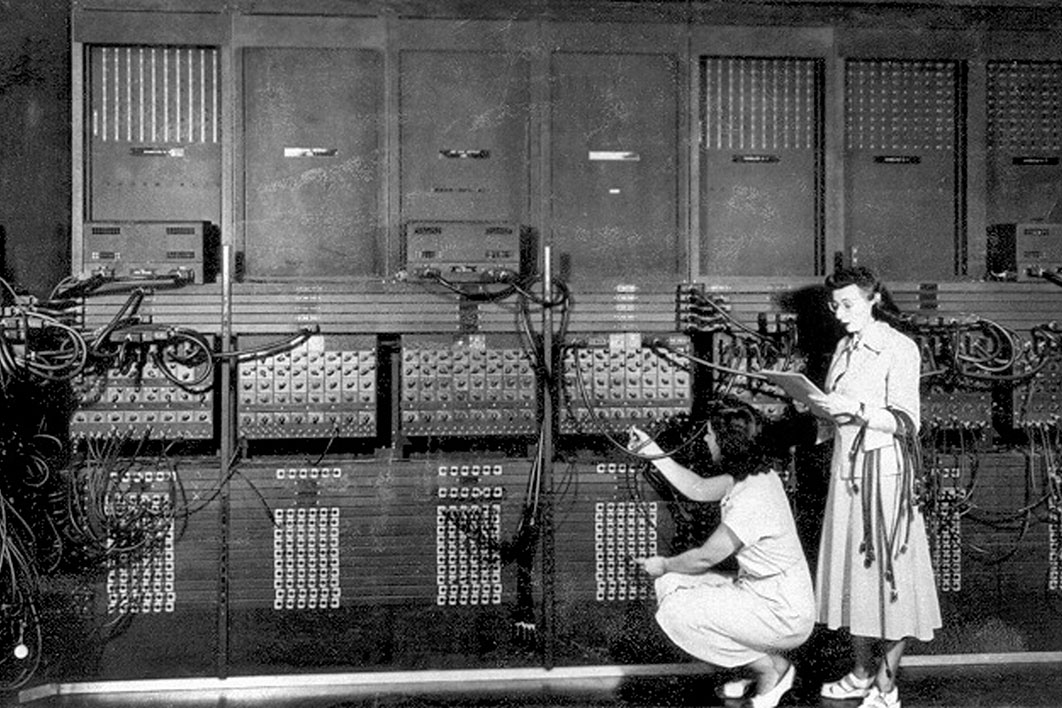

What I really don’t want to see, as the relationship between technical systems and humans takes on greater status in the industry, is women being pushed out of these important roles. It would be the continuation of a historical pattern in the tech industry. As technology historians including Marie Hicks have shown, despite this persistent sense that women “just don’t like computing,” women were once the largest trained workforce in the computing industry. They calculated ballistics and space-travel trajectories; they programmed the large, expensive electromechanical computers crunching data for government departments and commercial companies while being paid about the same as secretaries and typists. But as the value of computing grew, women were squeezed out, sidelined, overtaken by male colleagues. Once considered a “soft” profession, women’s work, not “technical” as defined by the men who occupied other technical roles, computer programming eventually became highly paid, prized… and male-dominated.

I worry that we’re about to do it again, this time in ethical AI. Ethical AI is in the process of transitioning from being a “soft” topic for people more concerned with humans than computers, and treated by the technology industry primarily as a side project, to being a mainstream focus. Experts in ethical AI are a hot commodity. KPMG recently declared “AI ethicists” one of the most in-demand hires for technology companies of 2019. There’s a reason a book about ethical AI like Made by Humans got picked up by a mainstream publisher.

A significant proportion of critical research fuelling interest in the impacts of AI on humans and society has been driven by women: as computer scientists, mathematicians, journalists, anthropologists, sociologists, lawyers, non-profit leaders and policymakers. MIT researcher Joy Buolamwini’s work on bias in facial recognition systems broke open the public debate about whether facial recognition technology is yet fit for purpose. Julia Angwin’s team at ProPublica investigated bias in computer programs being used as aids in criminal sentencing decisions, and exposed competing, incompatible definitions of algorithmic fairness. Data scientist Cathy O’Neil’s book Weapons of Math Destruction was one of the first big mainstream books to question whether probabilistic systems were as flawless as they appeared.

There are many prominent women in AI ethics: Kate Crawford and Meredith Whittaker, co-directors of the AI Now Institute in New York and long-term scholars of issues of bias and human practice with data; Margaret Mitchell, a senior machine-learning researcher at Google, well known in the industry for her work in natural-language processing, who has drawn attention to issues of bias in large corpuses of text used to train systems in speech and human interaction; Shira Mitchell, quantifying fairness models in machine learning; danah boyd; Timnit Gebru; Virginia Eubanks; Laura Montoya; Safiya Umoja Noble; Rachel Coldicutt; Emily Bender; Natalie Schluter. In early 2019, when TOPBOTS, the US-based strategy and research firm influential among companies investing in applied AI, summarised its top ten “breakthrough” research papers in AI ethics, more than half of the authors were women.

Which is why it’s been disconcerting to see, as interest in and funding for AI ethics grows, the gender distribution on panels discussing ethics, and in organisations set up explicitly to consider ethical AI, start to skew male again. It’s not just that more men are taking an interest in ethical AI; this is a reflection of its importance, which is something to be celebrated. What troubles me is that what “ethical AI” encompasses often seems to end up in these conversations being redefined as a narrow set of technical approaches that can be applied to existing, male-dominated professions.

Even as the women in these professions — and many of the influential women I just cited are computer scientists and machine-learning researchers — are doing pathbreaking work on the limitations (as well as the strengths) of technical methods quantifying bias and articulating notions of “fairness,” these technical interpretations of ethics become the sole lens through which “ethical AI” is commoditised.

Ethical AI is thus recast as a “new,” previously unconsidered technical problem to be solved, and solved by men. I have been consistently unnerved to find myself talking to academics and institutes planning research investments in ethical AI who don’t know who Joy Buolamwini is, or Kate Crawford, or Shira Mitchell. And I worry that user researchers, designers, anthropologists, theorists — many of them women — whose work in the industry has for years involved marrying the choices made by engineers in designing systems with the humans affected by them, will end up being pushed out as contributors towards “ethical AI.” I’m afraid we’ll just keep finding new ways to render women in the industry invisible. I worry that my own contributions will be invisible.

Every woman in technology can tell you a story about invisibility. At a workshop in 2018, I watched a senior, well-respected female colleague, who was supposed to be leading the discussion, get repeatedly interrupted and ignored. She finally broke into the conversation to say, mystified, as though she couldn’t quite trust what was in front of her own eyes and ears, “Didn’t I just say that? Did anyone hear me say that?” What stayed with me was the way she asked the group the question: she wasn’t angry, just… puzzled. As though perhaps the problem wasn’t that people weren’t listening to her, but that there was some issue with the sound in the room itself, or with her. As if perhaps the problem was that she was a ghost who couldn’t be heard.

I’ve listened to men repurpose my proposals as their own — not intentionally or maliciously, just not realising that they had heard me say the same thing seconds earlier. I’ve watched as men on projects I’ve led attribute our success to their own contributions. It is unsettling and strange to be both visible as a woman in tech, and yet invisible as a contributor to tech. For women of colour, invisibility is doubly felt. Even at conferences and on panels dedicated explicitly to the experiences of women in tech, most panellists will be white women in the industry.

Perhaps nobody has captured the stark influence of gender on visibility more vividly than the late US neurobiologist Ben Barres. In his essay “Does Gender Matter?” Barres recounts his own experiences as an undergraduate student at MIT, entering the school as a woman before transitioning to a man. At the time, Barres was responding to comments made by male academics, including Harvard president Lawrence Summers and psychologist Steven Pinker, asserting biological differences to explain the low numbers of women in maths and science. In the essay, Barres is careful not to attribute undue significance to his own experiences. But sometimes anecdotes reveal as much as statistics do.

Barres described how, as a woman and as the only person to solve a difficult maths problem in a large class mainly made up of men, she was told by the professor that “my boyfriend must have solved it for me.” After she changed sex, a faculty member was heard to say that “Ben Barres gave a great seminar today, but then his work is much better than his sister’s.” Confronting innate sex differences head on, Barres described the intensive cognitive testing he underwent before and after transitioning and the differences he observed: increased spatial abilities as a man; the ability to cry more easily as a woman. But by far, he wrote, the main difference he noticed on transitioning to being a man was “that people who don’t know I am transgendered treat me with much more respect. I can even complete a whole sentence without being interrupted by a man.”

This is the first time I’ve written publicly about my own experiences as a woman in technology. Up till now I’ve played by the rules. I have spent years being polite in the face of interruptions, snubs, harassment. I know instinctively how to communicate my opinion in a way that won’t upset anyone and have tended to approach talking about sexual harassment and discrimination on the basis that what matters most is not upsetting anyone. I have focused on finding the “right words,” the “right time” to talk about gender issues.

But I have observed the ways in which gender (and race, and sexuality) continues to shape who is in power and whose contributions get counted in the tech industry, in ethical AI. Even when it comes to the “right” way to talk about gender issues, I can’t help but notice how different “right” looks for men as compared with women, and for white women as compared with women of colour. Increasingly, men are publicly identifying as champions of change for women: they sign panel pledges, join initiatives pursuing gender equality, demand equal representation, and it’s seen as a career booster. Women demand change too forcefully and are labelled bullies, drama queens, reprimanded for their over-inflated sense of self-importance. Women of colour are vilified and hunted. And women who stay silent in the face of all this, as I have, implicitly endorse the status quo, often finding themselves swallowed up by it anyway. If my daughter grows up to be interested in tech, these are not the experiences I want her to have. I want her to be unafraid to speak up, to demand our attention. I want her to be seen, and I want her to speak up for others.

If there is no “right” way as a woman to speak about gender issues — if there is no “right” way for a woman to take up space, to take credit — then silence won’t serve me or save me either. The only way forward from here is to start speaking.

This essay is republished from Griffith Review 64: The New Disruptors, edited by Ashley Hay.